Unresolved debates about the future of AI

How far the current paradigm can go, AI improving AI, and whether thinking of AI as a tool will keep making sense

Today’s post is a lightly edited transcript of a talk I gave a few weeks ago, at the Technical Innovations for AI Policy Conference organized by FAR.AI. The video (21 min) is immediately below or at this link; other talks from the conference are on the organizers’ YouTube channel.

Let me know your thoughts on this kind of post—should I do this more often for talks, Congressional testimony, etc, or would you rather I just post original writing? Would a full post digging into one of the debates I touch on be interesting? It’s always great to hear what you all find interesting.

Lastly: if you’re receiving this by email, note that the email may be truncated. You may want to click through to the web or app version (just click on the post title) to read the post in full.

Talk transcript & slides

The problem that made me want to give this talk today was a recurring one.

An early instance was in 2022 when we had, very close to each other, headlines about deep learning hitting a wall and then ChatGPT launching a whole new revolution.

Last year, we had coverage from the Wall Street Journal—really good reporting—about real challenges inside OpenAI with scaling up their pre-trained models and how difficult that was and how they weren't happy with the results, and then on the literal same day we had the release of o3, the next generation of their reasoning model, and François Chollet—who's famously skeptical—saying that it was a significant breakthrough on his ARC-AGI benchmark. So these very contradictory takes, both of which had some truth to them.

Then last month, two big publications that I'm guessing many folks here have seen. One making the case that AI is just a normal technology and it's going to follow the same path we've seen in many previous technologies, the other describing how we might have AGI by 2027, superintelligence by 2030, and an absolutely radically transformed world. Again, very different perspectives, both of which have some truth to them.

So basically, this talk is for anyone who—raise your hand if you’ve ever been personally victimized by the onslaught of AI news and how contradictory it is? Yeah, this talk is for you. If you didn’t raise your hand you can leave, I won’t take offense, it’s fine.

A friend saw the title of the talk and said, "Wait, how did you get them to give you a nine-hour slot to cover all the unresolved debates?" But we're just going to talk about three debates. We’re going to focus on technical debates, meaning how AI will evolve as a technology, not what are we going to do about it or how will it affect society. And debates that are the most relevant in my view or are, according to me, a helpful way of breaking down some of the disagreements.

So in other words, we're not going to talk about timelines to AGI, and we're not going to talk about p(doom), because I think both of those are not as productive.

Here are the three debates that I want to talk about: How far can the current paradigm go? How much can AI improve AI? And will future AI still basically be tools, or will they be something else?

#1: How far can the current paradigm go?

People often talk about "is scale all you need" or "can you scale language models all the way to AGI?" I don't think this is that helpful of a question, or I think it's a little bit misguided. I would rather ask: are we on a good branch of the tech tree?

If you imagine that we're exploring this tree of potential technologies to develop, and we've explored some of the paths further than others, then the current one that we're on—you can call it different things, but I'm going to call it the generative pre-trained transformers (GPT) branch of the tree.

The reason I find this more useful is that it's not really the case that we've just taken GPT-1 from 2018 or something and just scaled that. Instead, we've been adding on different architectural changes, different ways of gathering and processing data, we're now doing reasoning, we're now doing agents—but it's all continuing along the same branch of the tree, or similar branches.

So for this debate—how far can the current paradigm go?—if it can go a long way, that would mean we can keep going in this branch of the tree that we're on, and it keeps bearing fruit, so to speak, to semi-mix metaphors. Versus, maybe we need to backtrack and go on a somewhat different branch of the tech tree, or maybe we need to go a long way back and maybe not even do deep learning or do something that's very different to language models.

There are different arguments for whether we can go far or not.

Arguments for “quite far”:

Small-to-medium improvements. One really important one is just recognizing that all of the progress we've seen over the past 10-15 years has not come from big paradigm shifts or big breakthroughs, but instead these different small- to medium-size improvements. Looking back to 2015-2016, starting to get reinforcement learning to work on games, figuring out how to combine it with tree search and beating human performance on Go; the transformer, which was a big deal, came out in 2017—the basis of all of our current language models—that was a big improvement, but not a paradigm-shifting improvement on LSTMs (long short-term memory networks); there’s a long history of these small to medium improvements, so we might keep seeing a lot of progress from that.

Finding new things you can scale. People often say with scaling, "do we just need to scale what we have?" But if you talk to the people inside AI companies who are doing this, the people doing the research, they don't think about just dialing up the scale knob. Instead, they think of a big part of their job as finding things that you can scale, finding things where if you dial up the scale knob, you get good returns. And if you talk to people inside those research teams right now, they think they have quite a few new things that they can keep scaling that we're at the beginning of. Things like reasoning training, multimodality as a new source of data, figuring out ways to train agentic capabilities at scale. We need things where you can turn the scale dial up and get good returns, and it looks like we might have a few right now that we're still on the early side of.

Adoption —> data flywheel. Another thing to be keeping an eye on: for the companies and research teams doing this, data is very valuable, and one really great way to get data is to have real-world adoption that you can then use to train your future systems. So as language models and technologies on the GPT branch of the tree are starting to be useful and starting to be adopted, that means we're going to get more of that flywheel, more of that reinforcement.

There are also reasons to think that the current paradigm won't get us that far or won't keep going that much further.

Arguments for “not much further”:

Intractable issues. There are some really big issues that have repeatedly caused problems for GPTs and that are still unsolved today. These include hallucinations, also known as confabulations—just making information up. Reliability—there's this capability-reliability gap where you can show that an AI system can do something very impressive (capability), but if it doesn't actually do that 90, 95, 99% of the time (reliability), it may not be that useful, depending on the application. We really haven't seen anything nearing really high reliability on some of the most sophisticated tasks. Overconfidence—another big problem for these models. You ask a question, they have no idea when they should say "I don't know" or "I know." They're not very good at recognizing if they've made a mistake and backtracking. Maybe they're getting better, maybe these won't be intractable forever, but they have been harder than some people would expect.

Fundamental limitations. There are also fundamental limitations to the way that we build GPTs right now. A big one is they can learn things that are in their training data when they're trained in this one big chunky way, and then you can put things in their context window for any individual use case, but we don't really have good ways right now of doing something in between. Having an AI system that you're using for some task at work, it does it a bunch of times and it gets better over time, it remembers that six months ago you had some conversation and it didn't seem relevant then but it's relevant now—it can go back and look at its notes, or whatever. We really don't have this sort of setup. People are trying things where you can build a knowledge base and get the AI to change its knowledge base, things like that, but it really isn't an inherent part of the way that we build AI right now, and it's not really working just yet. Likewise, lack of a physical body. Most of the AI systems we're using right now are not embedded in physical systems. There's definitely some interesting progress happening on robotics, so again, maybe this will change, but for now, I think it is a pretty significant limitation.

Pre-training scaling slowing. It does seem actually pretty clear that the improvements we're getting from just scaling pre-training—that initial phase of learning to imitate text or other kinds of data—it does seem like the value of continuing to scale that is slowing a little bit. It's also at a point where continuing to scale it means spending hundreds and hundreds of millions of dollars. So if the value is not worth hundreds and hundreds of millions of dollars, it's going to maybe be difficult to keep moving up that curve.

Everything on both sides of this ledger is pretty unclear, it’s uncertain how good are those arguments on the left-hand side, how good are those arguments on the right-hand side. I don't want to claim any of it is 100% solid. But I think it's all worth taking at least somewhat seriously.

I think how people interpret this chart is a pretty good encapsulation of the debate.

For anyone who hasn't seen it, this is a chart from an organization called METR, which is a really excellent third party AI evaluator. They created a dataset of tasks that take a different amount of time for humans to do. Then they measured how well can different AI systems perform those tasks and how long are the tasks that they can do well. The idea being that if you can perform a task that takes a human an hour, that's going to be a harder task than something that takes a human 20 seconds. You see this curve over time where the length of tasks they can do is doubling every seven months. Actually, recently, I think more recent data has maybe shown it curving up.

Some people look at this and say this shows AI can do longer and longer tasks. If you extrapolate the curve, you get something like AI being able to do tasks that take a month by 2030. That would be very complicated, or much more complicated than AI can do right now.

Other people look at this chart and they say, well, but look, it's a 50% success rate. So GPT-4 can do something like a five-minute task, but it only gets it right 50% of the time. That's not actually practically useful, and if you make the same chart looking at 90% success rate or 99% success rate, it looks much less impressive. Also, these tasks are divorced from context. They have to be, in order to run the test—they have to be these bite-size, isolated little chunks. And they're only software and reasoning problems, they're not a broader set. So a different perspective on this chart would be to say it doesn't really tell us much at all, it's only this one specific type of progress on this one specific type of problem. And again, I think there's something true to both perspectives, and only time will tell what the answer is.

#2: How much can AI improve AI?

The second big debate that I think is pretty core to a lot of other disagreements is how much AI is going to be able to improve AI.

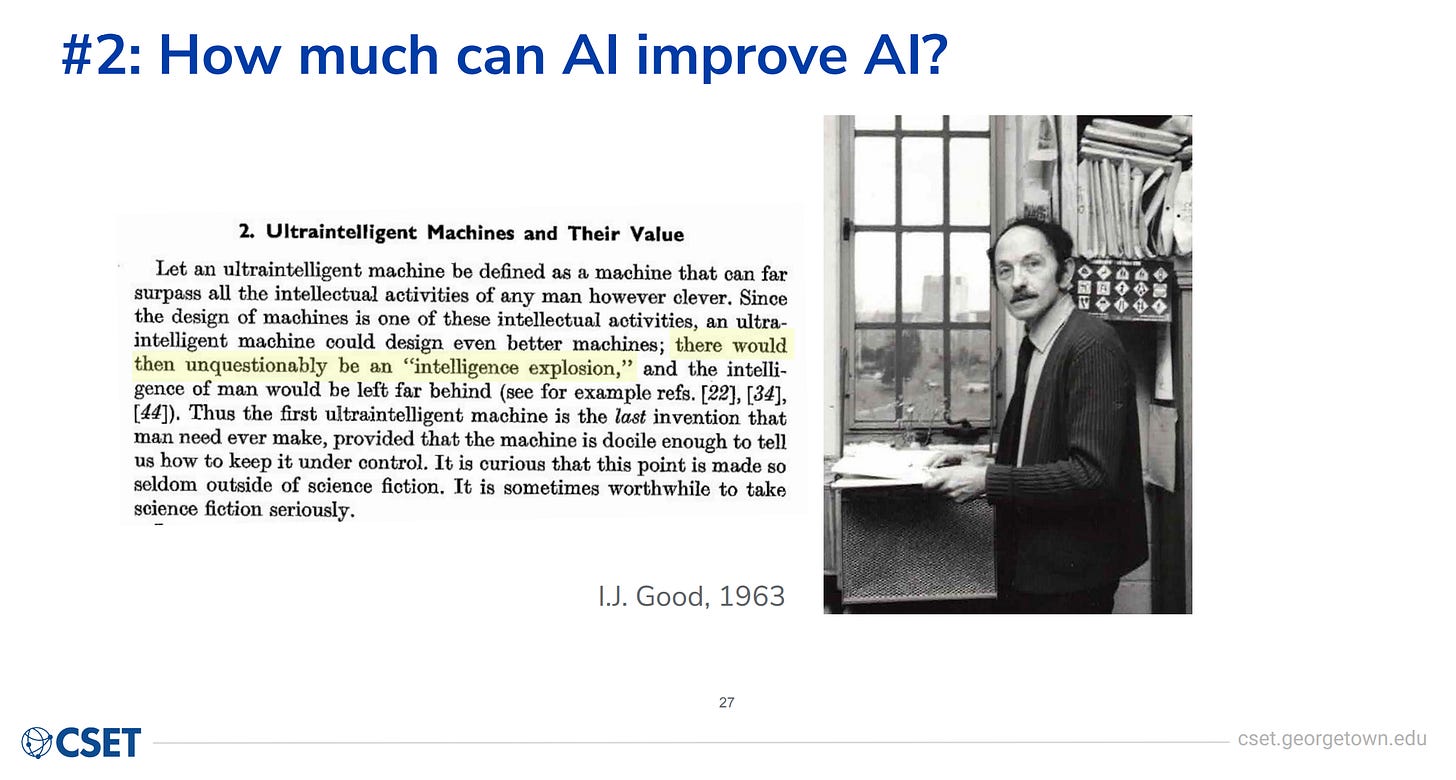

This original idea was maybe first pithily expressed by I.J. Good, who was an early computer scientist who worked with Turing. He wrote this interesting paper about ‘ultra-intelligent machines’—I'm waiting for that term to make a comeback.

Good says, if we had an ultra-intelligent machine that exceeded human performance on all tasks, then it would therefore be better at building more ultra-intelligent machines. So then "there would then unquestionably be an intelligence explosion, and the intelligence of man would be left far behind." He doesn't say anything about the intelligence of woman, so we're good. Just kidding.

But this is the basic idea. If you can use AI to improve AI, and the AI gets better than the humans at improving the AI, then you get this hockey stick curve feedback loop situation.

And this is already actually starting to happen. Good was talking about this like 60 years ago, but now just the last few years, we're starting to see really meaningful signs of this.

AlphaEvolve is a result from Google where they use their cutting-edge language models as part of a system to improve algorithms—evolutionary discovery of better and better algorithms. That system managed to accelerate the training of the LLMs that were in the system, so it was kind of improving itself. I think they said they sped up the training of a Gemini model by 1%, and when you're talking about hundreds of millions of dollars, 1% starts to be real money.

There are also early findings from a former colleague of mine,

, who does really great work on this. She's doing some informal surveying of machine learning researchers and engineers, and she said based on a number of conversations that they were already finding that AI was helping them in their research and engineering work. Junior folks were seeing somewhere between a 10-150% increase in productivity due to using AI tools. Senior researchers somewhat smaller but still meaningful, a 2-30% increase.Then just as I was preparing for this talk, I found this pretty interesting statistic. Claude Code is an Anthropic tool that helps developers code (using Claude, hence the name). This is the lead engineer on that project, and he said on a podcast that of the code underpinning Claude Code itself, the percentage of that that was written by Claude was “probably near 80[%].” So a very large proportion of this coding tool was written by the AI that is powering the coding tool.

But still, we don't actually know how much further this will go. So there’s a question—if the AI can do everything that AI researchers and engineers can do, then you naturally get this feedback loop where everything is just limited by, for example, the available computing power. But if you hit other bottlenecks, if there are other things that will slow things down, then you might not get that kind of hockey stick recursive growth.

Some potential bottlenecks:

Errors needing human review: Right now, the big one is that the coding tools (or research tools, or any AI being used for AI research) makes errors, and so you need to check it. This is a big reason why the productivity that those researchers were reporting on the previous slide is not that high yet. They still need to be looking at everything the AI is doing, running tests, checking the tests work, etc.

Research taste/judgment: In the future, maybe there will be fewer errors, but maybe if the AI is just writing code or executing pretty simple instructions, then it doesn't necessarily have the kind of research taste or judgment that you need in order to really automate that full research and engineering cycle.

Real-world experiments and/or deployments: And then even if you could automate that, maybe there are real-world experiments that you need to run, or real-world deployment—maybe you need to actually put it into a real organization or a real company in order to see what works, what doesn't, and get that feedback.

So those could all be bottlenecks. But then again, how sure are we that all of these bottlenecks can't be automated? I'm not 100% sure. I think it's a little bit of a wait-and-see situation. Time will tell which of these are really bottlenecks and which of these can actually be automated.

#3: Will future AIs still basically be tools, or something else?

The third disagreement is about future AI systems and whether it will make sense to think of them as tools.

The paper AI As Normal Technology, which I really recommend, is by

and at Princeton, who write the AI Snake Oil blog. It's very rich, it has a lot of really nice articulations of positions that I think many people intuitively hold about AI but haven't necessarily expressed. They say: "We view AI as a tool that we can and should remain in control of."Another famous skeptic of AI risk, Yann LeCun, has expressed a similar perspective: “Future AI systems […] will be safe and will remain under our control because *we* set their objectives and guardrails and they can't deviate from them.”

I think it's really important for anyone who doesn't think of AI this way, or doesn't think of future more advanced AI systems this way, to just spend some time sitting with the fact that this really is a very reasonable starting point. It is the way pretty much all AI systems work today, it's the way that most technologies work, and it also is something that we have experience dealing with.1

So, if it does seem like AI is going to continue being a tool where we determine how it's used—you open up your ChatGPT app, you type a question, it answers you, you close the app, we're done—then it means that things are going to be quite a lot simpler in many ways. If this is true, then we don't really need to spend too much time worrying about the goals of the AI system, what values it has. It's still somewhat relevant; if you ask a question about some political question, you still care what sort of responses you're getting, but that's less a question of the nature of the AI itself and more just about content.

Likewise, if AI systems continue to be tools, then people are going to be really central. It's not about what does the AI want, what is the AI going to do; it's about who is using the AI, and what they are going to do with it. Maybe we still worry about malfunctions or failures, but it's still much more comparable to past technologies.

But—I think there are some things about AI that are quite different from past technologies.

One is this idea, which you'll hear from researchers quite a lot, that AI systems are grown, not built.

What they mean by this is in contrast to, say, a smartphone, where the smartphone is built up of these little components, we put them together, and we really understand at a very detailed level how each of those components work and how they fit together to make a smartphone that does all the complicated things a smartphone does.

When it comes to AI, machine learning systems, deep learning systems, large language models—that's really not how they're developed at all. Instead, we have these algorithms that we run over data, using mathematical optimization processes that tweak all these billions or trillions of numbers, and end up with something that seems to work. We can test it and see, what does it do, how does it seem to behave, but we really don't have that component-by-component understanding that we're used to having with technologies. That makes it something a little bit more akin to an organic system—a plant, an animal, even just a bacteria—as opposed to something that we construct piece by piece ourselves.

There's also something really unusual about AI systems that is just starting to pop up over the last year or two, which is this idea of situational awareness. This isn’t about consciousness or any big philosophical concept; situational awareness is just: does the AI seem to be able to account for the situation that it itself is in?

Something we're starting to see is AI systems that notice and remark on the fact that a testing situation that they're put in, some strange hypothetical, seems like it's a test. So then, if they can tell that they're in a testing situation and they behave differently because they think they're in a testing situation, it kind of obviates the point of the test. We're very not used to technologies behaving this way. This is really not what your smartphone is going to do if you're running, say, some test to make sure that it survives in water, it's not going to be like, "Oh, I'm being tested, so I'll behave differently in the water than I would otherwise." This is really not usual when we think about technologies that are tools.2

A final one, which is something for the future, is that there are very strong financial/commercial incentives to build AI systems that are very autonomous and that are very general. Systems that you can give big picture, high-level, long-horizon tasks, complicated tasks, and let them go out and do them autonomously without much review, without too many constraints.

It may turn out that it's not feasible to build AI systems that work that way. Or it may turn out that we are able to decisively act and decide that we don't want to build AI systems that work that way. But I think the default path we should expect is that there are really strong incentives to do that, and so if it's technically possible, and we don't have some kind of significant intervention, those are the kind of systems that we're going to get.

And if that's right, and you have very autonomous, very general systems that are operating in complicated contexts, then it's not really going to make sense to think of them as tools.

One quote I found helpful here was from

, who is a co-founder of Anthropic, he leads policy there. This is an excerpt from a longer piece that I thought was very thoughtful on his Substack, ImportAI; he wrote (emphasis added):We should expect volition for independence to be a direct outcome of developing AI systems that are asked to do a broad range of hard cognitive tasks. […] We are not making dumb tools here - we are training synthetic minds. These synthetic minds have economic value which grows in proportion to their intelligence.

What I like about this quote is it's not saying we're going to make people, they're going to be conscious, they're going to have feelings—maybe some of those things are true, maybe they're not, but that's not what we're talking about here. What Jack is talking about is just, the fact that we're training systems to do difficult, economically valuable things in very general-purpose, open-ended ways means that we could get these strange-seeming behaviors that really don't characterize a tool. We could see those in AI systems, and again, we're already starting to see initial glimmers of that.

I think these are reasons to expect that if the technology keeps progressing, it may stop looking like a tool and look like something a little bit different, maybe more like, people will sometimes say, a ‘second species.’

A final thought for this third debate: if one side is “it's going to just clearly be a tool,” the other side is “it's going to be something like a second species,” an intermediate position that perhaps we're starting to see already could be to think of AI as an optimization process, maybe a self-sustaining optimization process, of the kind that maybe a market is or a bureaucracy is. It's not a tool, it's not something that you just pick up and use and then put down and it stops doing anything; it's also not necessarily a being or a mind; but it is still very powerful and it does make sense perhaps to think about what it is optimizing for, what kind of goals it has, what it might be likely to do in the future, as opposed to just being a tool. I think this is maybe a little bit less sci-fi-sounding way of thinking about this spectrum of possibilities as well.

Conclusion

That is where I'm going to leave it today. It looks like we have time for a couple questions. I hope that these three debates are a helpful way of breaking down some of the intractable and sometimes unproductive debates in the space.

Though see e.g. this paper (h/t Seth Lazar) making the case that even smartphones, which I would intuitively had said are clearly tools, are better thought of as symbiotes or even parasites with humans.

Modern smartphones are designed to manipulate the attention and behaviour of users in ways that further the interests of the corporations that built them. […] It is not plausible for a part of the cognitive system to be designed to thwart the goals and desires of the user in the way a smartphone is. Given this, we argue, modern smartphones are better understood as external to, but symbiotic with, our minds, and, sometimes even parasitic on us, rather than as cognitive extensions.

It is, however, something that we’re used to seeing from organizations, as in the VW emissions scandal. The company configured its engines to activate emissions controls only during controlled laboratory tests, then emit far more in regular use.

Really interesting. Kind of hard to have so many questions and so few answers but I guess that's where it's at.

Great talk for a broad audience. I'm very aligned with your points.